Bulk Importing StatCounter Logs using SQL Server

I was recently dealing with an issue involving a website that receives hundreds of thousands of visits a day where I needed to track down who (or what!) was hitting particular resources. Lacking any custom tools, my trusted buddy Excel was straining under the load and even having a super-fast machine with 16GB memory provided no relief. Sadly, that was just looking at half a day’s logs so I needed something much more robust. Step aside Excel and make room for SQL Server!

I can’t use that website for my examples, here, so instead I thought I would provide a solution to something similar using the excellent (and free) Stat Counter, which provides statistics on website visits. I (used to) have that for logicalmoon.com and sidcupchessclub.org plus know others make use of it, so figured this might be useful generally. By the way, the website at Stat Counter does provide lots of brilliant ways to view the data, but if you only have the free account, the log files aren’t kept for long (hence this solution).

What You Will Need

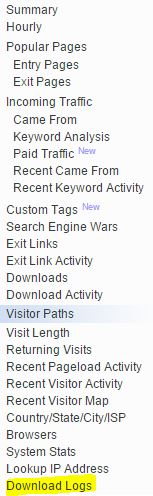

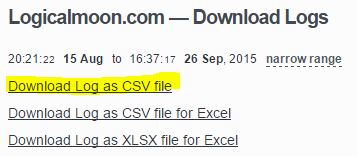

First, download the log file which you can find at:

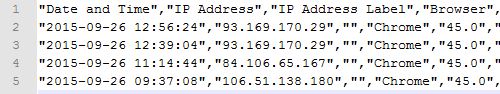

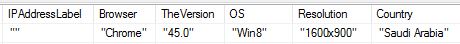

Before taking the first file which looks is:

Opening it, you can see that it’s a bit busy but what you might not notice is that each line is terminated with a New Line (NL) which is represented as ascii 10 or hex 0x0a.

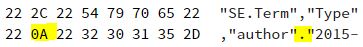

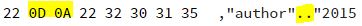

If you take a look at this file in a hex editor (I used the free HexEd.it), you can see the NL highlighted:

The problem with that is that SQL server will be looking for a Carriage Return (CR) as ascii 13, NL combination, not just the NL. Let’s fix that first by opening the file in Notepad++ and doing some magic.

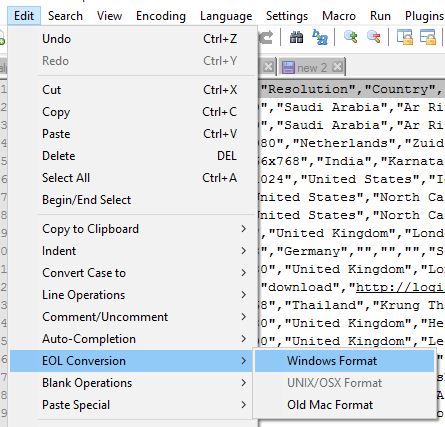

Go to Edit -> EOL Conversion -> Windows Format |

And that will replace all 0x0a with 0x0d 0x0a (CR LF).

Now I want to extract the field names I will use for my table. I could just make it up or type them in but I think I want Notepad++ to work a little harder. I removed the first line and added it to a new file before applying the following changes:

Original Line |

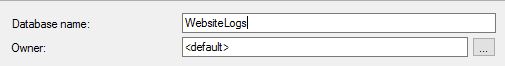

Finally, I saved the original file as a.txt and took the headings before popping over to SQL Server to set up the database and table.

After that I created a table called log to store everything. You will see that I have left the fields as VARCHAR because our text file is still full of quotes and I don’t want to remove those just yet. The concern is that if I do and there are some commas in the row columns, I might cause the import to fail when that field is mapped. I’m not sure it will but I don’t want to chance it right now.

1 | CREATE TABLE [dbo].[log]( |

After that, we want to get the data in. So, I use the very handy BULK INSERT command like so:

1 | BULK |

This is worth a little more explaining to draw out some of the decisions I made. Let’s start with the easy stuff. The FIRSTROW = 2 part means we can skip the heading row and begin with the data, and of course, the ROWTERMINATOR tells SQL Server to expect each line to end in a CR LF. I had tried to use just LF (‘\r‘ or 0x0a) but that didn’t work. What about the FIELDTERMINATOR? For that, my first attempt was to just use a comma but the problem was that that left a whole load of double quotes in my field values.

That’s OK because I could always remove them with something like:

1 | UPDATE LOG |

But then I would have to do that for each and every field. A bit of Googling later and I found this page on MSDN by AmruthaVarshiniJ. He mentions a few solutions, but the one I liked best was a half-way house between what I did and something which removes all of the quotes. As you can see, we’ve set the delimiter to be –","– which removes almost all except the first and last quotes.

OK, let’s make it happen: run the bulk insert and then clean up the first and last columns with this:

1 | UPDATE LOG |

You don’t need the WHERE clause but without it, or something like it, you are altering every row which on a big file will be an even bigger problem! Our data is now in the table, so what can we do it with? How about looking at the operating systems and counts of people accessing our website?

1 | SELECT OS, COUNT(*) |

Or, which countries and how many of each have visited?

1 | SELECT COUNTRY, COUNT(*) |

You could also see which is the most popular page:

1 | SELECT URL, COUNT(*) |

As you can see, this was quite straight-forward and in the future, you wouldn’t need to create the database or table, just import the logs. One thing to watch out for with Stat Counter is that it is a rolling log so you would end up with some duplication. To fix that, you could either edit the parts you don’t need, out, or handle them with some short queries. I hope this has helped someone! Happy stat-ting.

Hi! Did you find this useful or interesting? I have an email list coming soon, but in the meantime, if you ready anything you fancy chatting about, I would love to hear from you. You can contact me here or at stephen ‘at’ logicalmoon.com